Distributed CI Using Jenkins

Nx is a set of extensible dev tools for monorepos. Monorepos provide a lot of advantages:

- Everything at the current commit works together. Changes can be verified across all affected parts of the organization.

- Easy to split code into composable modules

- Easier dependency management

- One toolchain setup

- Code editors and IDEs are "workspace" aware

- Consistent developer experience

- ...

But they come with their own technical challenges. The more code you add into your repository, the slower the CI gets.

Example Workspace

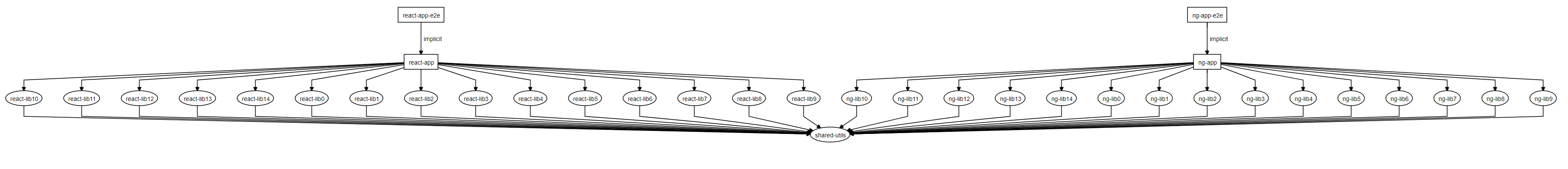

This repo is an example Nx Workspace. It has two applications. Each app has 15 libraries, each of which consists of 30 components. The two applications also share code.

If you run nx dep-graph, you will see somethign like this:

CI Provider

This example uses Jenkins. An Azure Pipelines example is also available, and it should not be too hard to implement the same setup on other platforms.

Baseline

Most projects that don't use Nx end up building, testing, and linting every single library and application in the repository. The easiest way to do that with Nx is something like this:

node {
  withEnv(["HOME=${workspace}"]) {
    docker.image('node:latest').inside('--tmpfs /.config') {
      stage("Prepare") {
        checkout scm
        sh 'yarn install'
      }

      stage("Test") {
        sh 'yarn nx run-many --target=test --all'
      }

      stage("Lint") {
        sh 'yarn nx run-many --target=lint --all'
      }

      stage("Build") {
        sh 'yarn nx run-many --target=build --all --prod'
      }
    }
  }
}

This retests, relints, and rebuilds every project. For this repository that takes about 45 minutes (most enterprise monorepos are significantly larger, so for them it can take many hours).

The easiest way to make your CI faster is to do less work, and Nx is great at that.

Building Only What is Affected

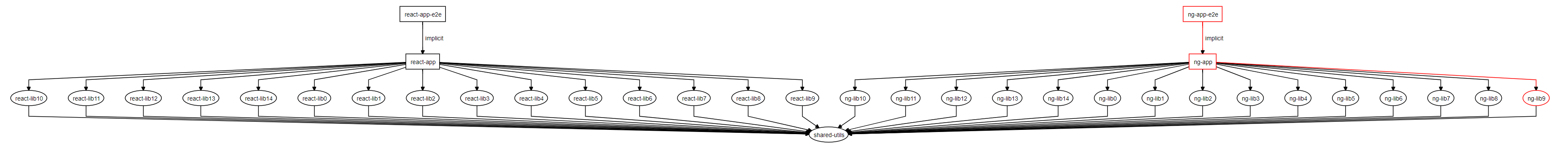

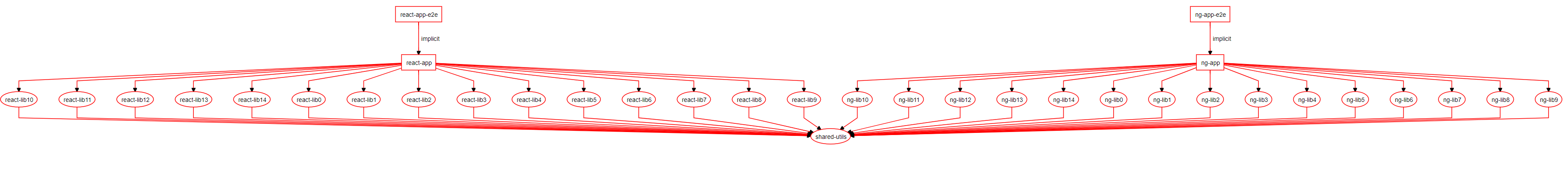

Nx knows what is affected by your PR, so it doesn't have to test/build/lint everything. Say the PR only touches ng-lib9. If you run nx affected:dep-graph, you will see something like this:

If you update the Jenkinsfile to use nx affected instead of nx run-many:

node {
  withEnv(["HOME=${workspace}"]) {
    docker.image('node:latest').inside('--tmpfs /.config') {
      stage("Prepare") {
        checkout scm
        sh 'yarn install'
      }

      stage("Test") {
        sh 'yarn nx affected --target=test --base=origin/master'
      }

      stage("Lint") {
        sh 'yarn nx affected --target=lint --base=origin/master'
      }

      stage("Build") {
        sh 'yarn nx affected --target=build --base=origin/master --prod'
      }
    }
  }
}

Then the CI time goes down from 45 minutes to 8 minutes.

This is a good result. It lowers the average CI time, but it doesn't help with the worst-case scenario: some PRs are going to affect a large portion of the repo.
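To see why a single PR can affect so much, here is a conceptual sketch in plain Groovy of how an affected set can be derived from a dependency graph: start from the changed projects and walk the graph backwards to every project that depends on them, directly or transitively. The graph, the project names, and the `affected` helper are hypothetical, and this is not Nx's actual implementation; Nx builds the real graph from your workspace.

```groovy
// deps[p] = projects that p depends on directly (hypothetical toy graph)
Map<String, List<String>> deps = [
    'app1'   : ['ng-lib1', 'ng-lib9'],
    'app2'   : ['ng-lib2', 'shared'],
    'ng-lib1': ['shared'],
    'ng-lib2': ['shared'],
    'ng-lib9': ['shared'],
    'shared' : [],
]

// Hypothetical helper: returns the changed projects plus every project that
// depends on one of them, directly or transitively (breadth-first search).
Set<String> affected(Map<String, List<String>> deps, Collection<String> changed) {
    // Invert the edges: dependents[p] = projects that depend directly on p
    Map<String, List<String>> dependents = [:].withDefault { [] }
    deps.each { project, ds -> ds.each { d -> dependents[d] << project } }

    Set<String> result = new LinkedHashSet<String>(changed)
    Deque<String> queue = new ArrayDeque<String>(changed)
    while (!queue.isEmpty()) {
        String current = queue.poll()
        dependents[current].each { parent ->
            // add() returns false if the project was already visited
            if (result.add(parent)) {
                queue.add(parent)
            }
        }
    }
    return result
}

println affected(deps, ['ng-lib9'])   // [ng-lib9, app1]
```

Changing ng-lib9 only pulls in app1, while changing the widely shared `shared` library pulls in every project in this toy graph. That is the worst case that affected commands alone cannot speed up.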

You can make it faster by running the tasks in parallel with the --parallel flag:

node {
  withEnv(["HOME=${workspace}"]) {
    docker.image('node:latest').inside('--tmpfs /.config') {
      stage("Prepare") {
        checkout scm
        sh 'yarn install'
      }

      stage("Test") {
        sh 'yarn nx affected --target=test --base=origin/master --parallel'
      }

      stage("Lint") {
        sh 'yarn nx affected --target=lint --base=origin/master --parallel'
      }

      stage("Build") {
        sh 'yarn nx affected --target=build --base=origin/master --prod --parallel'
      }
    }
  }
}

This helps, but it still has a ceiling. At some point it won't be enough: a single agent is simply insufficient, and you need to distribute CI across a grid of machines.

Distributed CI

To distribute the work, you need to split your CI job into multiple jobs:

                          / lint1
Prepare Distributed Tasks - lint2
                          - lint3
                          - test1
                          ....
                          \ build3

Distributed Setup

def distributedTasks = [:]

stage("Building Distributed Tasks") {
  jsTask {
    checkout scm
    sh 'yarn install'

    distributedTasks << distributed('test', 3)
    distributedTasks << distributed('lint', 3)
    distributedTasks << distributed('build', 3)
  }
}

stage("Run Distributed Tasks") {
  parallel distributedTasks
}

def jsTask(Closure cl) {
  node {
    withEnv(["HOME=${workspace}"]) {
      docker.image('node:latest').inside('--tmpfs /.config', cl)
    }
  }
}

def distributed(String target, int bins) {
  def jobs = splitJobs(target, bins)
  def tasks = [:]

  jobs.eachWithIndex { jobRun, i ->
    def list = jobRun.join(',')
    def title = "${target} - ${i}"

    tasks[title] = {
      jsTask {
        stage(title) {
          checkout scm
          sh 'yarn install'
          sh "npx nx run-many --target=${target} --projects=${list} --parallel"
        }
      }
    }
  }

  return tasks
}

def splitJobs(String target, int bins) {
  def String baseSha = env.CHANGE_ID ? 'origin/master' : 'origin/master~1'
  def String raw
  raw = sh(script: "npx nx print-affected --base=${baseSha} --target=${target}", returnStdout: true)
  def data = readJSON(text: raw)

  def tasks = data['tasks'].collect { it['target']['project'] }

  if (tasks.size() == 0) {
    return tasks
  }

  // Math.ceil is not allowed by the Jenkins sandbox, so round up with shell
  // integer arithmetic instead: (n + bins - 1) / bins (╯°□°)╯︵ ┻━┻
  def c = sh(script: "echo \$(( (${tasks.size()} + ${bins} - 1) / ${bins} ))", returnStdout: true).trim().toInteger()
  def split = tasks.collate(c)

  return split
}

Let's step through it:

To run jobs in parallel with Jenkins, we need to construct a map of string -> closure, where each closure contains the code we want to run in parallel. The goal of the distributed function is to build a compatible map. It starts by figuring out which jobs need to be run and splitting them into bins via splitJobs.
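The shape that `parallel` expects can be tried outside Jenkins. This plain-Groovy sketch builds a title -> closure map the same way distributed does, except that each closure only returns the command it would run instead of calling jsTask, stage, and sh. The bins and the helper name `distributedSketch` are hypothetical.

```groovy
// Hypothetical bins of project names for the "test" target (illustration only)
List<List<String>> bins = [['ng-lib1', 'ng-lib2'], ['ng-lib3', 'ng-lib4'], ['app1']]

// Hypothetical helper: builds a title -> closure map like the one Jenkins' `parallel` step takes.
// Each closure only returns the command it would run; in the Jenkinsfile the
// closure body calls jsTask, stage, and sh instead.
Map<String, Closure<String>> distributedSketch(String target, List<List<String>> bins) {
    Map<String, Closure<String>> tasks = [:]
    bins.eachWithIndex { List<String> bin, int i ->
        String list = bin.join(',')
        String title = "${target} - ${i}"   // coerced to a plain String key
        String command = "npx nx run-many --target=${target} --projects=${list} --parallel"
        tasks[title] = { -> command }
    }
    return tasks
}

def tasks = distributedSketch('test', bins)
// Outside Jenkins, just call each closure in turn
tasks.each { title, work -> println "${title}: ${work()}" }
```

In the Jenkinsfile, the final loop is replaced by `parallel`, which runs every closure in the map as its own branch, named after its key.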

Looking at splitJobs, the following line defines the base SHA that Nx uses to execute affected commands:

def String baseSha = env.CHANGE_ID ? 'origin/master' : 'origin/master~1'

Jenkins only sets CHANGE_ID for pull request builds. For a PR, Nx determines what has changed compared to origin/master. For a build of master, Nx determines what has changed compared to the previous commit. (This can be made more robust by remembering the last successful master run, which can be done by tagging that commit.)
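The branch logic is small enough to check in isolation. This sketch pulls the ternary into a hypothetical helper, `baseShaFor`, and uses a plain Map in place of Jenkins' env object. It relies on Groovy truth, so both a missing (null) and an empty CHANGE_ID select origin/master~1.

```groovy
// Hypothetical helper mirroring the ternary in splitJobs.
// `env` is a plain Map standing in for Jenkins' env object.
String baseShaFor(Map env) {
    // CHANGE_ID is only set for pull request builds.
    // Groovy truth: null or '' is false, any non-empty string is true.
    return env.CHANGE_ID ? 'origin/master' : 'origin/master~1'
}

println baseShaFor([CHANGE_ID: '42'])   // origin/master   (PR build)
println baseShaFor([:])                 // origin/master~1 (build of master)
```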

The following prints information about the affected projects that have the needed target. print-affected doesn't run any targets; it only prints information about them.

def String raw
raw = sh(script: "npx nx print-affected --base=${baseSha} --target=${target}", returnStdout: true)
def data = readJSON(text: raw)

readJSON is provided by the Jenkins Pipeline Utility Steps plugin. We then split the list of projects into bins with collate.
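The parsing and binning can also be exercised in plain Groovy. This sketch uses groovy.json.JsonSlurper in place of readJSON (a Jenkins pipeline step), a trimmed and hypothetical sample of print-affected output containing only the fields splitJobs reads, and a hypothetical helper, `splitIntoBins`. Note that plain integer division rounds down: with 7 projects and 3 bins it gives a bin size of 2, so collate would produce 4 bins, and with fewer projects than bins it gives 0. The sketch rounds up instead, using (n + bins - 1).intdiv(bins).

```groovy
import groovy.json.JsonSlurper

// Trimmed, hypothetical sample of `nx print-affected --target=test` output.
// Only the fields that splitJobs reads are included.
String raw = '''
{
  "tasks": [
    { "target": { "project": "ng-lib1", "target": "test" } },
    { "target": { "project": "ng-lib2", "target": "test" } },
    { "target": { "project": "ng-lib3", "target": "test" } },
    { "target": { "project": "ng-lib4", "target": "test" } },
    { "target": { "project": "ng-lib5", "target": "test" } },
    { "target": { "project": "ng-lib9", "target": "test" } },
    { "target": { "project": "app1", "target": "test" } }
  ]
}
'''

// Same extraction as splitJobs: one project name per affected task
List<String> projects = new JsonSlurper().parseText(raw).tasks.collect { it.target.project }

// Hypothetical helper: split projects into at most `bins` bins
List<List<String>> splitIntoBins(List<String> projects, int bins) {
    if (projects.isEmpty()) {
        return []
    }
    // Integer ceiling division: ceil(n / bins) == (n + bins - 1) intdiv bins
    int size = (projects.size() + bins - 1).intdiv(bins)
    return projects.collate(size)
}

println splitIntoBins(projects, 3)
// [[ng-lib1, ng-lib2, ng-lib3], [ng-lib4, ng-lib5, ng-lib9], [app1]]
```

Rounding up guarantees at most `bins` bins, with only the last one possibly smaller.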

Once we have our lists of jobs, we can go back to the distributed method. We loop over the list of split jobs for our target and construct the map that Jenkins requires to parallelize our jobs. Each closure runs on its own agent, so it has to check out the repository and install dependencies before running its bin of projects:

def tasks = [:]

jobs.eachWithIndex { jobRun, i ->
  def list = jobRun.join(',')
  def title = "${target} - ${i}"

  tasks[title] = {
    jsTask {
      stage(title) {
        checkout scm
        sh 'yarn install'
        sh "npx nx run-many --target=${target} --projects=${list} --parallel"
      }
    }
  }
}

Finally, we merge each target's map of jobs into one big map and pass that to parallel:

stage("Building Distributed Tasks") {
  jsTask {
    checkout scm
    sh 'yarn install'

    distributedTasks << distributed('test', 3)
    distributedTasks << distributed('lint', 3)
    distributedTasks << distributed('build', 3)
  }
}

stage("Run Distributed Tasks") {
  parallel distributedTasks
}
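The merge relies on Groovy's `<<` operator for maps, which adds every entry of the right-hand map to the left-hand one (like putAll) and returns the left-hand map. A minimal plain-Groovy sketch with hypothetical per-target maps shaped like the ones distributed returns:

```groovy
// Hypothetical per-target maps (title -> closure)
Map<String, Closure> testTasks = ['test - 0': { -> 'test bin 0' }, 'test - 1': { -> 'test bin 1' }]
Map<String, Closure> lintTasks = ['lint - 0': { -> 'lint bin 0' }]

def distributedTasks = [:]
// For maps, << adds all entries of the right-hand map and returns the left-hand map
distributedTasks << testTasks
distributedTasks << lintTasks

println distributedTasks.keySet()   // [test - 0, test - 1, lint - 0]
```

Because map keys are unique, a repeated title would silently replace an earlier branch; including the target name in each title keeps the test, lint, and build bins apart.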

Improvements

With these changes, rebuilding, retesting, and relinting everything takes only 7 minutes, and the average CI time is even lower. Best of all, you can add more agents to your pool when needed, so you can keep the worst-case CI time under 15 minutes no matter how big the repo gets.

Summary

- Rebuilding/retesting/relinting everything on every code change doesn't scale. In this example it takes 45 minutes.

- Nx lets you rebuild only what is affected, which drastically improves the average CI time, but it doesn't address the worst-case scenario.

- Nx helps you run multiple targets in parallel on the same machine.

- Nx provides print-affected and run-many, which make implementing distributed CI simple. In this example the time went down from 45 minutes to only 7.